An API (sort of) for adding links to ArchiveBox

I use ArchiveBox extensively to save web content that might change or disappear. A REST API is apparently in the works, but it doesn’t appear to have been merged into the main branch yet. So I cobbled together a small FastAPI application that archives links via a POST request by wrapping the archivebox command line interface. If you are impatient, you can skip to the full source code. Otherwise I’ll describe my setup to provide some context.

My ArchiveBox server

The ArchiveBox instance I’m running is on a Debian 12 box on my LAN, one that I use for a host of utility services in my home lab. I installed it in a Docker container. To run the instance:

    cd ~/archivebox/data
    docker run -v $PWD:/data -p 8000:8000 -it archivebox/archivebox

Using the ArchiveBox command line interface

To archive a link, we can run archivebox add your_url. Since ArchiveBox is running in a Docker container, the command becomes docker exec --user=archivebox CONTAINER_NAME /bin/bash -c "archivebox add your_url". The quotes matter: without them, bash -c treats only archivebox as the command to run. This FastAPI application is essentially a wrapper around that CLI functionality.
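To make the wrapping concrete, here is a minimal sketch of how that docker exec command line can be assembled in Python. The function name build_docker_cmd is hypothetical and not part of the service below; the sketch assumes Python 3.8+ for shlex.join, which quotes each argument so that a URL containing characters such as & reaches archivebox as a single word.

```python
import shlex
from typing import List, Optional


def build_docker_cmd(container: str, url: str,
                     tags: Optional[List[str]] = None) -> List[str]:
    """Return the argv list that runs `archivebox add` inside the container."""
    inner = ["archivebox", "add"]
    if tags:
        # ArchiveBox takes tags as one comma-separated value
        inner += ["--tag", ",".join(tags)]
    inner.append(url)
    # bash -c expects a single string, so join the inner command with quoting
    return ["docker", "exec", "--user=archivebox", container,
            "/bin/bash", "-c", shlex.join(inner)]


if __name__ == "__main__":
    print(build_docker_cmd("CONTAINER_NAME", "https://example.com/?a=1&b=2", ["test"]))
```

The list form is what asyncio.create_subprocess_exec or subprocess.run expects, so no outer shell is involved on the host; only the inner string is parsed by bash inside the container.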

FastAPI ArchiveBox server API

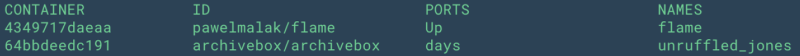

In the full source code below you’ll notice that I have hard-coded the CONTAINER_NAME. Yours may be different and can be found with:

    docker ps | awk '
    NR == 1 {
        # Print the header row
        printf "%-20s %-25s %-25s %s\n", $1, $2, $7, $NF
    }
    $0 ~ /^[a-z0-9]/ {
        # Print container details
        printf "%-20s %-25s %-25s %s\n", $1, $2, $7, $NF
    }'

In my case I get:

so that’s what I use for CONTAINER_NAME
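If you would rather look the name up from Python, the following is a rough sketch that uses docker ps with its --format option. The helper names parse_docker_ps and archivebox_containers are hypothetical, and the filter assumes the image name contains "archivebox", as it does for the archivebox/archivebox image used above.

```python
import subprocess
from typing import List, Tuple


def parse_docker_ps(output: str) -> List[Tuple[str, str]]:
    """Parse lines of 'NAME<TAB>IMAGE' into (name, image) pairs."""
    rows = []
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, _, image = line.partition("\t")
        rows.append((name, image))
    return rows


def archivebox_containers() -> List[str]:
    """Return names of running containers whose image mentions archivebox."""
    out = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}\t{{.Image}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    # Assumption: the image name contains "archivebox"
    return [name for name, image in parse_docker_ps(out) if "archivebox" in image]


if __name__ == "__main__":
    print(archivebox_containers())
```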

Adding a link for archival

To add a link via the /archive POST endpoint:

    curl -X POST http://my_ip_address:9000/archive \
      -H "Content-Type: application/json" \
      -d '{"url": "https://example.com", "tags": ["test"]}'

This returns, e.g.:

    {
      "job_id":"1f532ca8-1466-414e-8d1e-b6fc9fe526b8",
      "status":"in_progress",
      "url":"https://example.com",
      "start_time":"2024-12-25T05:45:24.541473",
      "end_time":null,
      "duration_seconds":null,
      "error":null,
      "output":null
    }

If you want to check the progress of the archival job, you can query with the job_id returned with the submission:

    curl http://my_ip_address:9000/status/JOB_ID

Full source code

    from fastapi import FastAPI, HTTPException, BackgroundTasks
    from pydantic import BaseModel, HttpUrl
    from typing import List, Dict, Optional
    import asyncio
    import logging
    import shlex
    import uvicorn
    from datetime import datetime
    import uuid
    from collections import defaultdict

    logging.basicConfig(
        level=logging.INFO,
        format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'
    )
    logger = logging.getLogger('archivebox-service')

    app = FastAPI(title="ArchiveBox Service")

    CONTAINER_NAME = "unruffled_jones"

    # In-memory storage for job status (lost when the service restarts)
    jobs: Dict[str, Dict] = defaultdict(dict)

    class ArchiveRequest(BaseModel):
        url: HttpUrl
        tags: List[str] = []

    class JobStatus(BaseModel):
        job_id: str
        status: str
        url: str
        start_time: datetime
        end_time: Optional[datetime] = None
        duration_seconds: Optional[float] = None
        error: Optional[str] = None
        output: Optional[str] = None

    async def run_in_container(cmd: List[str]) -> tuple[str, str]:
        """Run a command inside the ArchiveBox container and return (stdout, stderr)"""
        docker_cmd = [
            "docker", "exec",
            "--user=archivebox",
            CONTAINER_NAME,
            "/bin/bash", "-c",
            # Quote each argument so that characters such as & in a URL,
            # or anything unexpected in a tag, are not interpreted by bash
            shlex.join(cmd)
        ]
        logger.info(f"Running command: {' '.join(docker_cmd)}")
        process = await asyncio.create_subprocess_exec(
            *docker_cmd,
            stdout=asyncio.subprocess.PIPE,
            stderr=asyncio.subprocess.PIPE
        )
        stdout, stderr = await process.communicate()
        if process.returncode != 0:
            raise Exception(stderr.decode())
        return stdout.decode(), stderr.decode()

    async def archive_url_task(job_id: str, url: str, tags: List[str]):
        """Background task for archiving URLs"""
        try:
            cmd = ["archivebox", "add"]
            if tags:
                tag_str = ",".join(tags)
                cmd.extend(["--tag", tag_str])
            cmd.append(str(url))

            start_time = datetime.now()
            stdout, stderr = await run_in_container(cmd)
            end_time = datetime.now()
            duration = (end_time - start_time).total_seconds()

            jobs[job_id].update({
                "status": "completed",
                "end_time": end_time,
                "duration_seconds": duration,
                "output": stdout
            })
        except Exception as e:
            jobs[job_id].update({
                "status": "failed",
                "end_time": datetime.now(),
                "error": str(e)
            })
            logger.error(f"Job {job_id} failed: {e}")

    @app.post("/archive", response_model=JobStatus)
    async def start_archive(request: ArchiveRequest, background_tasks: BackgroundTasks):
        """Start an archival job and return immediately with a job ID"""
        job_id = str(uuid.uuid4())
        start_time = datetime.now()

        # Initialize job status
        jobs[job_id] = {
            "job_id": job_id,
            "status": "in_progress",
            "url": str(request.url),
            "start_time": start_time
        }

        # Schedule the archival task
        background_tasks.add_task(archive_url_task, job_id, str(request.url), request.tags)
        return JobStatus(**jobs[job_id])

    @app.get("/status/{job_id}", response_model=JobStatus)
    async def get_job_status(job_id: str):
        """Get the status of a specific job"""
        if job_id not in jobs:
            raise HTTPException(status_code=404, detail="Job not found")
        return JobStatus(**jobs[job_id])

    @app.get("/health")
    async def health_check():
        """Simple health check endpoint"""
        try:
            stdout, stderr = await run_in_container(["archivebox", "version"])
            return {
                "status": "healthy",
                "archivebox": "available",
                "container": CONTAINER_NAME,
                "version": stdout.strip()
            }
        except Exception as e:
            logger.error(f"Health check failed: {str(e)}")
            return {"status": "unhealthy", "error": str(e)}

    if __name__ == "__main__":
        import argparse

        parser = argparse.ArgumentParser(description='ArchiveBox API Service')
        parser.add_argument('--host', default='0.0.0.0', help='Host to bind to')
        parser.add_argument('--port', type=int, default=9000, help='Port to bind to')
        parser.add_argument('--container', default=CONTAINER_NAME,
                            help='Docker container name for ArchiveBox')
        parser.add_argument('--log-level', default='INFO',
                            choices=['DEBUG', 'INFO', 'WARNING', 'ERROR', 'CRITICAL'],
                            help='Logging level')
        args = parser.parse_args()

        # Allow the container name to be overridden from the command line
        if args.container:
            CONTAINER_NAME = args.container

        logger.setLevel(args.log_level)
        logger.info(f"Starting ArchiveBox service on {args.host}:{args.port}")
        logger.info(f"Using ArchiveBox container: {CONTAINER_NAME}")

        uvicorn.run(app, host=args.host, port=args.port)
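As a usage sketch for the /archive and /status endpoints above, here is one way a client might submit a link and poll until the job leaves the in_progress state. It uses only the standard library. BASE_URL is the placeholder address from the curl examples, and the helper names submit, fetch_status and wait_for_job are hypothetical, not part of the service.

```python
import json
import time
import urllib.request
from typing import Callable, Dict, List, Optional

BASE_URL = "http://my_ip_address:9000"  # placeholder; replace with your host


def submit(url: str, tags: Optional[List[str]] = None) -> Dict:
    """POST a URL to /archive and return the initial job status."""
    payload = json.dumps({"url": url, "tags": tags or []}).encode()
    req = urllib.request.Request(
        f"{BASE_URL}/archive",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def fetch_status(job_id: str) -> Dict:
    """GET /status/{job_id} and return the decoded JSON."""
    with urllib.request.urlopen(f"{BASE_URL}/status/{job_id}") as resp:
        return json.load(resp)


def wait_for_job(job_id: str,
                 fetch: Callable[[str], Dict] = fetch_status,
                 interval: float = 2.0,
                 timeout: float = 300.0) -> Dict:
    """Poll until the job is no longer in_progress, or raise TimeoutError."""
    deadline = time.monotonic() + timeout
    while True:
        status = fetch(job_id)
        if status["status"] != "in_progress":
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Job {job_id} still in progress after {timeout}s")
        time.sleep(interval)


if __name__ == "__main__":
    job = submit("https://example.com", ["test"])
    print(wait_for_job(job["job_id"]))
```

Passing the fetch function as a parameter keeps the polling loop testable without a running server. Since job state lives in memory, a job_id from before a service restart will return a 404.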